Artificial intelligence technology is developing rapidly and becoming increasingly widespread. Today, anyone with Internet access who can type can instantly "write" all kinds of copy and generate realistic images. This technology has brought much convenience to daily life, but it has also raised growing concerns, especially since many countries have yet to put relevant regulations in place. Dozens of countries will hold general elections this year. Experts warn that deepfake content, which is becoming ever harder to tell apart from the real thing, could trigger a "tsunami of disinformation" that threatens elections and democracy around the world.

Before last September's election in Slovakia, a Central European country, a fake recording of pro-European candidate Michal Simecka circulated on social media. The race was close, and it is unclear how much the AI-generated fake recording affected voters' intentions.

In January, a few days before the US Democratic Party's primary in New Hampshire, many people received robocalls in which they "heard" US President Biden urging them not to vote in the primary. A New Orleans magician later told the media that it took him only $1 and less than 20 minutes to make the fake recording. Political consultant Kramer, who commissioned it, was working at the time for Phillips, Biden's rival within the Democratic Party, but the Phillips campaign said it had known nothing about it.

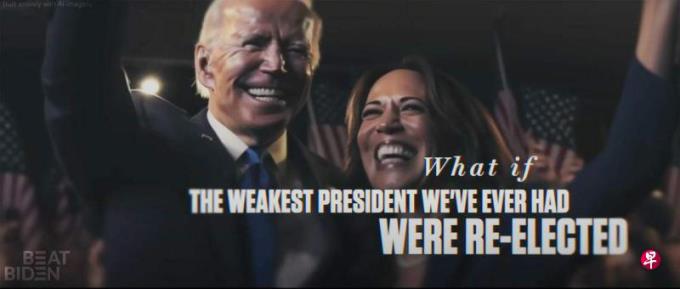

According to the British Broadcasting Corporation (BBC), supporters of former US President Trump, Biden's opponent, are using AI to generate images of Trump with African American voters in order to encourage Black voters to vote for him.

In recent election campaigns in Pakistan, India, Indonesia and elsewhere, candidates have also been seen using AI to support their campaigns or being attacked with AI-generated smear material.

Humans identify AI-generated voices with only 73% accuracy

A study released by University College London last August found that people identified AI-generated speech correctly only 73% of the time, and training them to recognize AI content made little difference. The voice samples in the study were produced with relatively old AI tools. As AI becomes better at imitation, human detection rates are expected to fall further.

Another study, released by the Australian National University last November, found that the people most often fooled by AI-generated faces tended to be the most confident in their judgments. This raises concerns that, with AI's help, disinformation could have an even greater impact on elections and democratic systems around the world.

A research report led by George Washington University and released in January predicted that by the middle of this year, AI-driven activity by bad actors will become a daily occurrence. Barry O'Sullivan, a professor at University College Cork, predicts that by 2025 or earlier there will be ample evidence that some elections have been influenced by AI-generated propaganda.

Karryl Kim Sagun Trajano, a researcher in future issues and technology at Nanyang Technological University, Singapore, told Lianhe Zaobao in an email interview that AI undoubtedly poses risks and threats to elections, especially in an era of "truth decay". "Truth decay breeds public distrust and fuels conspiracy theories. This erosion of trust can have dire consequences, such as voters becoming susceptible to disinformation, voter suppression and voter apathy. Ultimately, it may cause voters to lose trust in government."

Fake news is of course nothing new, but combining generative AI with social media networks gives it far greater reach and power.

Research: Growing awareness of deepfakes may erode trust in real videos

Zeve Sanderson, executive director of New York University's Center for Social Media and Politics, told Lianhe Zaobao that research over the past 10 years suggests concerns about disinformation swaying votes may be exaggerated. Misinformation makes up only a small share of the media that ordinary people encounter, and it is concentrated among a small number of groups. In the United States, most voters have made up their minds before they vote and are unlikely to change them at the last minute.

However, AI can indeed make false information more pervasive, more convincing, more targeted and harder to detect. Sanderson said: "Rather than whether there will be more (AI-generated misinformation), the bigger question is whether more people will see it."

He pointed out that traditional social media platforms were built on the so-called social graph, meaning users see content circulating within their own social circles. TikTok, which came later, uses algorithms to push content to users from outside those circles. "This approach is spreading to other platforms. As AI makes fake videos easier to produce, TikTok users may receive political misinformation that they would not see on other platforms."

A research report released last October found that as people become more aware of deepfakes, their trust in real videos may be undermined. Experts worry that as deepfake technology keeps improving, people may no longer be able to tell truth from falsehood, or may even stop believing facts altogether. Meanwhile, those who really did or said something they should not have could use this as an excuse to escape responsibility.

Sanderson cited Russia's alleged interference in the 2016 US election as an example. Research showed that the interference itself had minimal impact on voters. However, the related media coverage and discussion had an indirect effect, drawing attention to the fragility of American democracy and even leading people to question the election results.

He said deepfakes could indirectly cause people to lose confidence in the political system and the information ecosystem. People's worries about AI "will fuel the so-called liar's dividend, that is, exploiting the public's belief that the information environment is full of falsehoods to dismiss real information as fake."

For example, in lawsuits involving Tesla's self-driving cars and the January 6 riot at the US Capitol, defense lawyers have claimed that evidence presented in court was deepfaked. Sanderson said: "It is not hard to imagine that this year, after a 'hot mic' incident, a candidate might claim the video is fake." A "hot mic" refers to remarks that get broadcast because a microphone was accidentally left on.

Cheap and effective, AI becomes a campaign asset

Used properly, AI can help candidates work out which issues to focus on and how to write campaign copy, saving considerable manpower and money in costly campaigns. In Indonesia's presidential election in February, the campaign of Prabowo, who held a wide lead in the polls, used AI in its publicity. This included cute cartoon images that recast the iron-fisted former general as an approachable grandfather.

In India, which is about to hold national elections, this has become a business. A local political consultant estimates that India's market for AI-related election activities will reach US$60 million (about S$80.64 million). These activities include voice calls and messaging, avatar creation and multilingual media posts. Some experts also believe AI could speed up the digitization of India's election process and change the long-standing reality of Indian elections being drawn-out and enormous in scale.

Saifuddin Ahmed, an assistant professor at Nanyang Technological University's Wee Kim Wee School of Communication and Information, told Lianhe Zaobao that politicians are increasingly aware of how important social media has become as a major source of political information across all social groups. In political propaganda, using AI-generated and deepfake content, and especially the new practice of spreading such content through social media platforms, can give them unique advantages. In addition, the informal political language and narratives used in deepfake content can make politicians seem more approachable.

He said: "Engaging people who are not interested in traditional campaigning, such as younger voters, and discussing complex political issues with them is a challenge. With deepfake technology, politicians can spread content that is easier to digest and more engaging, bringing politics closer to these people in innovative ways."

Suharto "resurrected" to canvass votes, sparking ethical controversy

Saifuddin said that although there is no precedent yet, parties and candidates could also use AI-generated content to give voters an immersive sensory experience that showcases their abilities and how they differ from their opponents. He stressed that although using AI in campaigns is meant to capture voters' attention, the ultimate goal should be to gradually turn this engagement into genuine interest and participation in politics.

However, in the absence of relevant regulations and oversight, AI will inevitably be abused. During the Indonesian election, the Golkar Party used deepfake technology to "resurrect" former President Suharto and had the AI-generated Suharto canvass votes on its behalf. This sparked ethical controversy and raised questions about whether it could affect the fairness of the election.

Divyendra Singh Jadoun, founder of the Indian AI startup The Indian Deepfaker, told Al Jazeera that in recent months he has received many "unethical requests" from campaign teams asking him to make fake videos and audio, including pornographic content, to attack political opponents. Jadoun has always turned these requests down, but as far as he knows, many people are willing to take on such work, and at very low prices.

He said: "No political party thinks that using AI to manipulate voters is a crime. It is just part of the campaign strategy."

Countering the AI threat requires a whole-of-society effort

Governments and the tech community in many countries have begun discussing how to regulate AI more effectively, but countering AI's threat to elections will require the whole of society to work together.

Saifuddin said in the interview that introducing a comprehensive legal framework and clear rules for the responsible use of AI in political campaigns is critical. He noted that South Korea's National Assembly recently amended the law to ban the distribution of deepfake campaign videos in the 90 days before an election.

Other countries are also exploring how to regulate AI. Last October, US President Biden signed an executive order setting new safety standards for AI, including requiring developers of leading AI systems to share safety test results and other critical information, and improving relevant standards and testing tools. In 2023, at least 15 US states passed legislation or resolutions regulating AI, but with the US Congress deeply divided along party lines, federal legislation is unlikely before the November election.

Sanderson suggested that governments should support research into the social and behavioral consequences of AI rather than focusing only on technical solutions. They could also invest in proven methods to strengthen public "resilience" against AI-generated misinformation, and legislate to require social media platforms to share data with independent researchers.

Social media platforms must invest more in trust and safety

In industry, on February 16, 20 technology companies, including OpenAI, Microsoft, Amazon, Google, TikTok, IBM, Meta and X, signed a Tech Accord to Combat Deceptive Use of AI in 2024 Elections. The companies did not commit to banning or removing deepfake content, but they agreed to take reasonable measures to detect and label such content created or distributed on their platforms.

Experts welcomed the accord but said technology companies must do more. Some online platforms notorious for rampant disinformation have not signed it, which weakens its effect. Critics also question whether profit-driven companies will honor their commitments.

Sanderson noted that social media platforms must invest more resources in trust and safety, but the opposite is happening. Recently, X and Meta have not only laid off staff but also rolled back some policies they had previously used to safeguard election integrity.

Sanderson urged policymakers and companies to put in place measures to prevent AI abuse during this election year. This would slow the adoption of AI in elections and give everyone time to better understand AI's potential impact. He also hopes the media will use detailed reporting to explain AI's potential risks clearly, and avoid utopian or dystopian hype around this new technology.

Experts worry that new AI tools now in development or yet to come will bring further, unknown risks. "We see new AI tools being developed every month ... Whether safeguards against AI abuse work depends not only on institutions' ability to implement robust security measures against AI-related threats, but also on public awareness and digital literacy."

AI threats can be effectively curbed only when governments, industry and individuals all take sufficient measures. "Industry players should set up guardrails to prevent AI from being used to spread misinformation. Scholars, media practitioners and information professionals should work together to improve information and media literacy, especially AI literacy, while leaving room for electoral reform."

In any case, AI technology is rapidly becoming part of people's lives. Nicolas Müller, a scientist at Germany's Fraunhofer Institute for Applied and Integrated Security, told the Financial Times: "This technology is here to stay and will only get better ... audio or video and other media ... We may have to live with it, just as we live with Covid."