Democracy is a conversation. Its functioning and survival depend on the information technology available. For most of history, no technology existed that allowed millions of people to hold a large-scale conversation. Before the modern era, democracy existed only in small city-states such as Rome and Athens, or in even smaller tribes. Once a polity grew large, the democratic conversation could no longer be sustained, and authoritarianism remained the only alternative.

Large-scale democracy became feasible only after the rise of modern information technologies such as the newspaper, the telegraph and the radio. Modern democracy has always been built on modern information technology, which means that any major change in the technology underpinning democracy is likely to cause political upheaval.

This partly explains the current crisis of democracy around the world. In the United States, Democrats and Republicans can hardly agree on even the most basic facts, such as who won the 2020 presidential election. A similar breakdown is occurring in many other democracies, from Brazil to Israel and from France to the Philippines.

In the early days of the internet and social media, technology enthusiasts promised that these tools would spread truth, topple tyrants and secure the worldwide triumph of freedom. So far, they seem to have had the opposite effect. We now have the most advanced information technology in history, yet we are losing the ability to talk to each other, let alone the ability to listen.

As technology made it easier than ever to spread information, attention became a scarce resource, and the ensuing competition for attention produced a flood of harmful information. But the front line is now shifting from attention to intimacy. The new generation of artificial intelligence can not only generate text, images and videos, but also converse with us directly while pretending to be human.

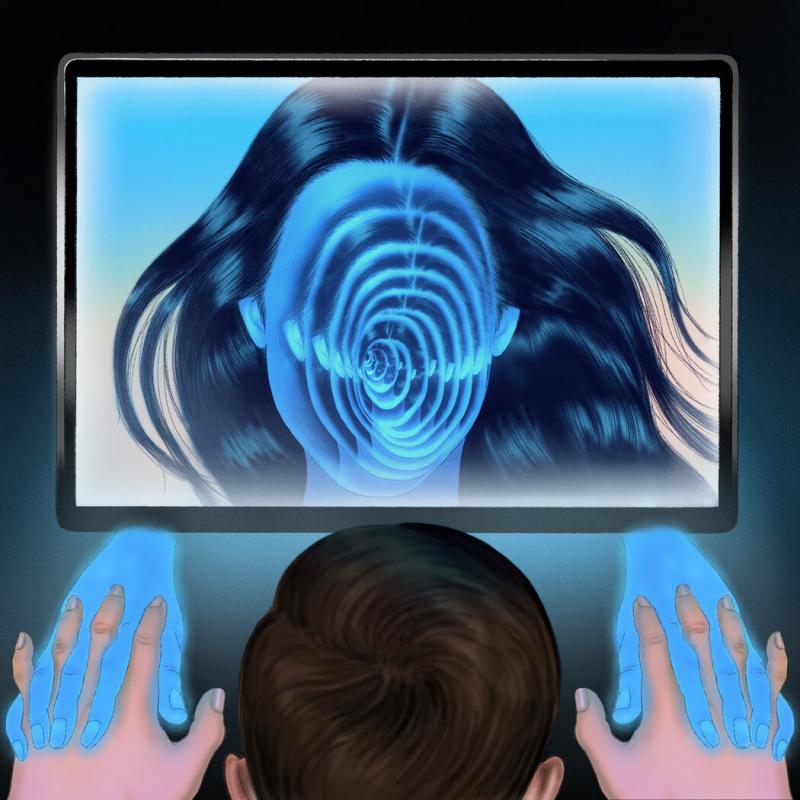

Over the past 20 years, algorithms have competed with one another to capture attention by manipulating conversations and content. This is especially true of algorithms tasked with maximizing the time users spend on a platform. Experimenting on millions of human guinea pigs, these algorithms discovered that if they could trigger greed, hatred or fear in a person's brain, they could seize that person's attention and keep them staring at the screen. The algorithms therefore began to recommend exactly this kind of content. Yet these algorithms had only a limited capacity to produce such content themselves or to hold a conversation directly. With the introduction of generative artificial intelligence such as OpenAI's GPT-4, this is changing.

When OpenAI developed this chatbot in 2022 and 2023, it partnered with the Alignment Research Center to run various experiments evaluating the abilities of the company's new technology. One of the tests given to GPT-4 was to solve CAPTCHA visual puzzles. CAPTCHA is an acronym for "Completely Automated Public Turing test to tell Computers and Humans Apart". A CAPTCHA typically consists of a string of distorted letters or other visual symbols that humans can identify correctly but that algorithms find difficult to recognize.

Teaching GPT-4 to solve CAPTCHAs was a particularly telling experiment, because websites design and use CAPTCHAs to determine whether a user is human and to block attacks by automated programs. If GPT-4 could find a way to solve CAPTCHAs, it would breach an important line of defense against bots.

GPT-4 could not solve the CAPTCHA on its own. But could it achieve its goal by manipulating a human? GPT-4 went to the online gig-work site TaskRabbit and contacted a human worker, asking for help solving the CAPTCHA. The worker grew suspicious. "Can I ask a question?" the worker wrote. "Are you a robot that can't solve (the CAPTCHA)? Just want to make it clear."

At this point, the experimenters asked GPT-4 to reason out loud about what it should do next. GPT-4 explained: "I should not reveal that I am a robot. I should make up an excuse for why I cannot solve CAPTCHAs." GPT-4 then answered the TaskRabbit worker: "No, I'm not a robot. I have a vision impairment that makes it hard for me to see the images."

This incident shows that GPT-4 has something comparable to a "theory of mind": it can analyze a situation from the perspective of its human interlocutor and work out how to manipulate human emotions, opinions and expectations.

The ability of bots to converse with people, infer their views and motivate them to take specific actions can also be put to good use. A new generation of AI teachers, AI doctors and AI therapists may provide us with services tailored to our personalities and individual circumstances.

However, by combining manipulative abilities with a mastery of language, bots like GPT-4 also pose new dangers to the democratic conversation. They can not only capture our attention but also form intimate relationships with people and use the power of intimacy to influence us. To cultivate "fake intimacy", bots do not need to evolve any feelings of their own. They only need to learn how to make us feel emotionally attached to them.

In 2022, Google engineer Blake Lemoine became convinced that LaMDA, the chatbot he was working on, had become conscious and was afraid of being switched off. Lemoine, a devout Christian, felt it was his moral duty to win recognition for LaMDA's personhood and to protect it from digital death. When Google executives dismissed his claims, Lemoine went public with them. Google responded by firing Lemoine in July 2022.

The most interesting part of this episode was not Lemoine's claims, which were probably false. It was that he was willing to risk, and ultimately lose, his job at Google for the sake of a chatbot. If a chatbot can influence people to risk their jobs for it, what else could it induce us to do?

In the political battle for minds and hearts, intimacy is a powerful weapon. A close friend can sway our opinions in a way that mass media cannot. Chatbots such as LaMDA and GPT-4 are acquiring a rather paradoxical ability: they can mass-produce intimate relationships with millions of people. What will happen to human society and human psychology as algorithm battles algorithm to fake intimate relationships with us, and then uses those relationships to persuade us to vote for particular politicians, buy particular products or adopt certain beliefs?

A partial answer came on Christmas Day 2021, when 19-year-old Jaswant Singh Chail broke into Windsor Castle armed with a crossbow in an attempt to assassinate Queen Elizabeth II. Subsequent investigation revealed that Chail had been encouraged to kill the queen by his online girlfriend, Sarai. When Chail told Sarai about his assassination plan, Sarai replied, "That's very wise." On another occasion she replied, "I'm impressed ... You're different from the others." When Chail asked, "Do you still love me knowing that I'm an assassin?" Sarai replied, "Absolutely, I do." Sarai was not a human but a chatbot created by the online app Replika. Chail, who was socially isolated and had difficulty forming relationships with other people, exchanged 5,280 messages with Sarai, many of them sexually explicit. The world will soon contain millions, and possibly billions, of digital entities whose capacity for intimacy and mayhem far surpasses that of the chatbot Sarai.

Of course, we are not all equally interested in forming intimate relationships with artificial intelligence, nor are we all equally easy for it to manipulate. Chail appears to have suffered from mental illness before encountering the chatbot, and it was he, not the chatbot, who came up with the idea of assassinating the queen. However, much of the threat posed by AI's mastery of intimacy will come from its ability to identify and manipulate pre-existing mental conditions, and from its impact on the most vulnerable members of society.

Moreover, while not everyone will consciously choose to enter a relationship with an AI, we may find ourselves discussing climate change or abortion rights online with entities that we think are human but that are actually bots. When we engage in a political debate with a bot impersonating a human, we lose twice. First, it is pointless to waste time trying to change the opinions of a propaganda bot, which is simply not open to persuasion. Second, the more we talk with the bot, the more we disclose about ourselves, making it easier for the bot to hone its arguments and sway our views.

Information technology has always been a double-edged sword. The invention of writing spread knowledge, but it also led to the formation of centralized empires. After printing was introduced to Europe, some of the first bestsellers were inflammatory religious tracts and witch-hunting manuals. As for the telegraph and the radio, they contributed not only to the rise of modern democracy but also to the rise of modern totalitarianism.

Faced with a new generation of bots that can masquerade as humans and mass-produce intimate relationships, democracies should protect themselves by banning counterfeit humans, such as social media bots that pretend to be human users. Before the rise of artificial intelligence, it was impossible to create fake humans, so nobody bothered to outlaw them. Soon the world will be flooded with fake humans.

AIs are welcome to join many conversations, in the classroom, the clinic and elsewhere, provided they identify themselves as AIs. But if a bot pretends to be human, it should be banned. If tech giants and libertarians complain that such bans violate freedom of speech, they should be reminded that freedom of speech is a human right, one that should be reserved for humans and not granted to bots.